Using ConvKB Torch in my project was a game-changer, as its CNN-based approach captured complex relationships in my knowledge graph with impressive accuracy. It seamlessly integrated with PyTorch, making the implementation process smooth and efficient for my AI-driven application.

ConvKB Torch is a deep learning model that uses convolutional neural networks (CNNs) to generate embeddings for entities and relations in knowledge graphs, capturing complex relationships across all embedding dimensions. It has been reported to outperform earlier embedding models such as TransE and DistMult on link prediction benchmarks.

This comprehensive guide will cover everything you need to know about ConvKB Torch, including its architecture, features, applications, benefits, installation, and a step-by-step tutorial on how to use it effectively.

What is ConvKB Torch?

ConvKB Torch is a deep learning model that leverages convolutional neural networks (CNNs) to generate embeddings for entities and relations in a knowledge graph. Unlike traditional KG embedding methods, which rely on vector dot products or distance-based scoring functions, ConvKB captures global interactions across all dimensions using convolutional filters.

Key Features of ConvKB Torch

✔ CNN-Based Approach – Uses convolutional layers to capture rich relationships between entities.

✔ High Scalability – Its parameter count grows linearly with the number of entities and relations, so it can be trained on large knowledge graphs.

✔ PyTorch Integration – Fully compatible with PyTorch for easy implementation and experimentation.

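✔ Strong Link Prediction Results – The original paper reported state-of-the-art link prediction results on WN18RR and FB15k-237 at publication time, although later re-evaluations found that some of these gains depend on how tied scores are handled during evaluation.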

What performance metrics can be used to evaluate ConvKB Torch?

Accuracy

Accuracy is a fundamental performance metric for ConvKB Torch in triple classification, where each test triplet must be labeled valid or invalid. It measures the proportion of test triplets that the model classifies correctly.

Higher accuracy indicates that the model separates true triplets from false ones well. It is most useful for classification-style tasks; ranking tasks such as link prediction are usually evaluated with MRR and Hits@k instead.
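As a minimal sketch, assuming higher scores mean more plausible triplets and a single global decision threshold (papers often tune one threshold per relation on validation data instead), triple classification accuracy can be computed as follows. The function names are illustrative and not part of any ConvKB codebase.

```python
def classification_accuracy(scores, labels, threshold):
    """Fraction of triplets whose predicted label matches the gold label.

    Assumes higher score = more plausible: a triplet is predicted valid
    when its score is >= threshold. `labels` holds True/False.
    """
    correct = sum((s >= threshold) == y for s, y in zip(scores, labels))
    return correct / len(labels)


def best_threshold(scores, labels):
    """Try every observed score as a cut-off and keep the most accurate one."""
    return max(sorted(set(scores)), key=lambda th: classification_accuracy(scores, labels, th))


# Toy validation scores and gold labels (made up for illustration)
scores = [0.9, 0.2, 0.7, 0.4]
labels = [True, False, False, True]
print(classification_accuracy(scores, labels, 0.5))  # 0.5
print(best_threshold(scores, labels))                # 0.4
```

In practice the threshold is chosen on a validation split and then applied unchanged to the test split.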

Mean Reciprocal Rank (MRR)

MRR averages the reciprocal rank (1/rank) of the correct answer over all test queries, so a correct answer ranked first contributes 1, ranked second contributes 0.5, and so on. In the context of ConvKB Torch, it measures how well the model ranks true triplets above corrupted ones.

The higher the MRR score, the better the model is at placing correct answers near the top of its predicted list. It is widely used for evaluating models in tasks like link prediction and information retrieval.

Hits@k

Hits@k is the proportion of test queries for which the correct entity appears in the top k positions of the model's ranked predictions. For example, Hits@1 counts how often the correct entity is ranked first, while Hits@10 counts how often it is in the top 10.

This metric is especially useful when evaluating the effectiveness of models in tasks like knowledge graph completion and recommendation systems, where the focus is on retrieving the most relevant entities.
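The sketch below computes MRR and Hits@k from per-query ranks. It assumes higher scores are better (negate the scores if your implementation gives valid triplets lower scores, as the original ConvKB loss does) and counts ties against the correct entity, a conservative choice because optimistic tie-breaking can inflate results. The standard "filtered" setting, which removes other known true triplets from each candidate list before ranking, is left out for brevity.

```python
def rank_of_true(scores, true_index):
    """1-based rank of the correct candidate among all scored candidates.

    Higher score = better. Candidates tied with the correct one are counted
    as ranked above it, so constant scores cannot produce a perfect rank.
    """
    true_score = scores[true_index]
    return 1 + sum(1 for i, s in enumerate(scores) if i != true_index and s >= true_score)


def mrr(ranks):
    """Mean reciprocal rank over all test queries."""
    return sum(1.0 / r for r in ranks) / len(ranks)


def hits_at_k(ranks, k):
    """Fraction of test queries whose correct answer is in the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


print(rank_of_true([0.1, 0.9, 0.5, 0.9], 1))  # 2 (tied with candidate 3)
ranks = [1, 3, 2, 10]                          # toy ranks for four test queries
print(round(mrr(ranks), 4))                    # 0.4833
print(hits_at_k(ranks, 1), hits_at_k(ranks, 3), hits_at_k(ranks, 10))  # 0.25 0.75 1.0
```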

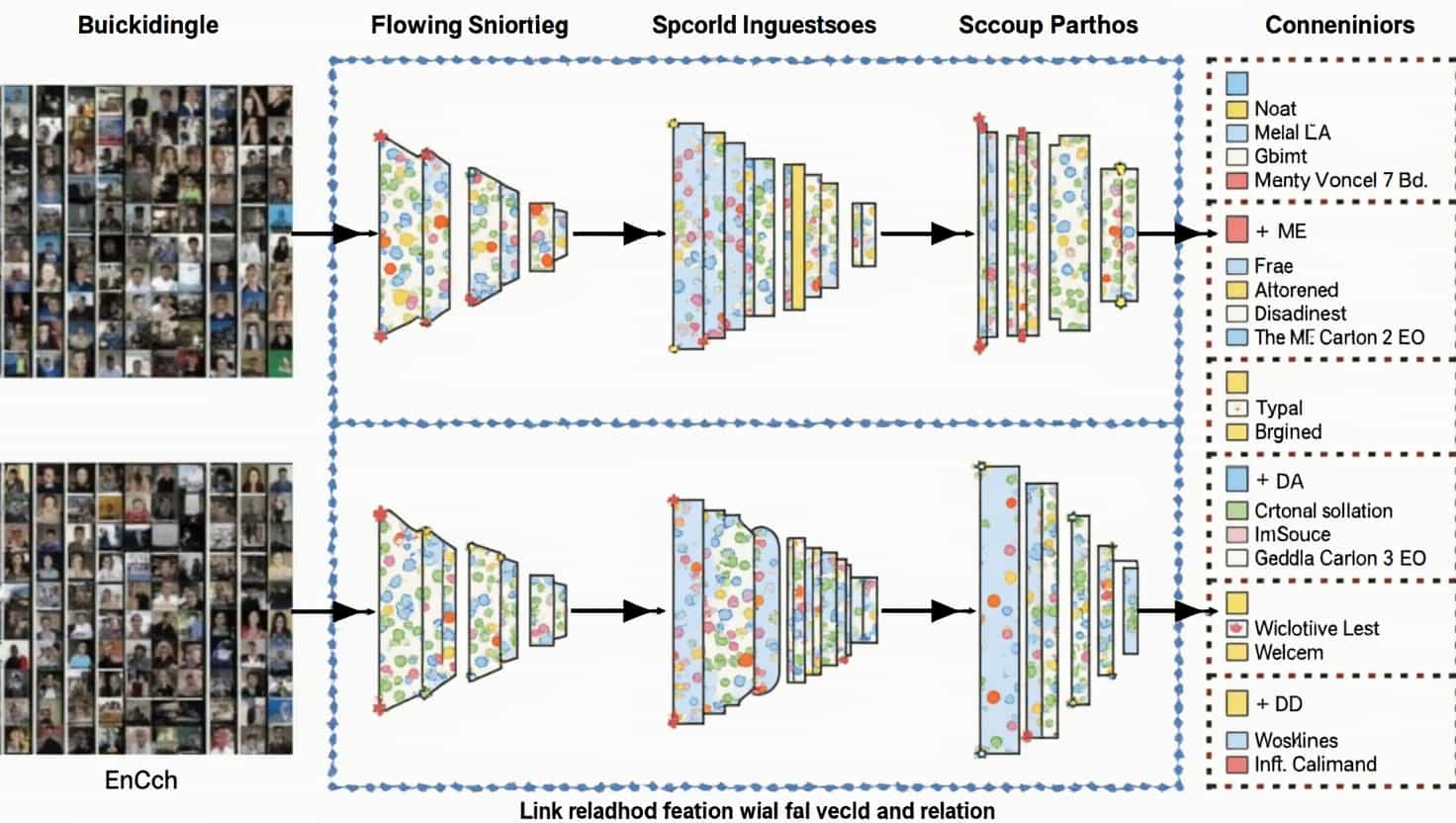

How ConvKB Torch Works: A Deep Dive into its Architecture

ConvKB Torch is built on the CNN framework, but instead of processing images, it operates on knowledge triplets (head, relation, tail). Here’s how it works:

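- Embedding Layer
    - Each entity (E) and relation (R) in the KG is mapped to a d-dimensional vector.
    - Example triplet: (Paris, CapitalOf, France) → Embeddings: (E_Paris, R_CapitalOf, E_France)
- Convolutional Layer
    - The three embeddings are stacked as the columns of a d × 3 matrix.
    - Convolutional filters of size 1 × 3 slide down the rows of this matrix, so each filter combines the head, relation, and tail values at the same embedding dimension. A ReLU activation turns each filter's output into a feature map of length d.
- Fully Connected Layer & Score Function
    - The feature maps are concatenated into a single feature vector.
    - A dot product with a learned weight vector w (a fully connected layer with a single output) produces the score that measures the plausibility of the triplet.
- Loss Function & Training
    - The original ConvKB paper minimizes a soft-plus (logistic) loss with L2 regularization on w, using corrupted triplets as negatives; a margin-based ranking loss is a common alternative.
    - The goal is for true triplets to receive better scores than false (corrupted) ones.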
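These steps can be sketched as a small PyTorch module. This is an illustrative re-implementation, not the reference code: the class name `ConvKBSketch`, the layer sizes, and averaging the loss over the batch are choices made here. Following the paper, the score is f(h, r, t) = concat(ReLU([e_h, e_r, e_t] ∗ Ω)) · w, and the loss is softplus(l · f) with l = +1 for true and -1 for corrupted triplets, plus (λ/2)‖w‖², which pushes true triplets toward lower scores.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ConvKBSketch(nn.Module):
    """Minimal ConvKB-style scorer (illustrative sketch, not the reference code)."""

    def __init__(self, num_entities, num_relations, dim=50, num_filters=64, dropout=0.0):
        super().__init__()
        self.ent = nn.Embedding(num_entities, dim)
        self.rel = nn.Embedding(num_relations, dim)
        # Each 1x3 filter sees (h_i, r_i, t_i) for one embedding dimension i.
        self.conv = nn.Conv2d(1, num_filters, kernel_size=(1, 3))
        self.dropout = nn.Dropout(dropout)
        # Dot product with the weight vector w = linear layer with one output.
        self.fc = nn.Linear(num_filters * dim, 1, bias=False)

    def forward(self, triples):
        # triples: LongTensor of shape (batch, 3) holding (head, relation, tail) ids
        h = self.ent(triples[:, 0])
        r = self.rel(triples[:, 1])
        t = self.ent(triples[:, 2])
        x = torch.stack([h, r, t], dim=2).unsqueeze(1)  # (batch, 1, dim, 3)
        feats = F.relu(self.conv(x))                    # (batch, filters, dim, 1)
        feats = feats.flatten(start_dim=1)              # concatenate all feature maps
        return self.fc(self.dropout(feats)).squeeze(1)  # one score per triplet


def convkb_loss(scores, labels, w, lam=0.001):
    # labels: +1 for true triplets, -1 for corrupted ones (paper's sign convention).
    return F.softplus(labels * scores).mean() + lam / 2 * w.pow(2).sum()


torch.manual_seed(0)
model = ConvKBSketch(num_entities=10, num_relations=3, dim=8, num_filters=4)
batch = torch.tensor([[0, 1, 2], [3, 0, 4]])  # toy (head, relation, tail) ids
labels = torch.tensor([1.0, -1.0])            # first true, second corrupted
scores = model(batch)
loss = convkb_loss(scores, labels, model.fc.weight)
loss.backward()
print(scores.shape)  # torch.Size([2])
```

With this sign convention, negate the scores before passing them to ranking code that assumes higher is better.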


Can ConvKB Torch be fine-tuned for specific tasks or datasets?

Yes, ConvKB Torch can be fine-tuned for specific tasks or datasets. Fine-tuning is done by adjusting hyperparameters, modifying the architecture, or using task-specific loss functions to optimize the model for a particular application.

For example, you can adapt ConvKB Torch for tasks like link prediction, entity classification, or even specialized applications like recommendation systems. This flexibility allows ConvKB Torch to perform well across diverse domains and datasets.
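One common way to fine-tune a pretrained model on a new dataset is to freeze the learned embeddings and update only the scoring layers with a smaller learning rate. The PyTorch sketch below shows that pattern; the `ModuleDict` is a hypothetical stand-in for a pretrained ConvKB-style model, and the attribute names `ent`, `rel`, `conv`, and `fc` are assumptions, not names from the reference implementation.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for a pretrained ConvKB-style model (d = 16, 8 filters).
model = nn.ModuleDict({
    "ent": nn.Embedding(100, 16),
    "rel": nn.Embedding(5, 16),
    "conv": nn.Conv2d(1, 8, kernel_size=(1, 3)),
    "fc": nn.Linear(8 * 16, 1, bias=False),
})
# In practice, pretrained weights would be loaded here with model.load_state_dict(...).

# Freeze entity and relation embeddings; only the convolution and output layer adapt.
for name in ("ent", "rel"):
    model[name].weight.requires_grad_(False)

trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.Adam(trainable, lr=1e-4)  # smaller learning rate than initial training
```

A common variation is to keep the embeddings trainable but put them in their own optimizer parameter group with an even smaller learning rate.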

Comparison with Traditional KG Embedding Models

| Model | Architecture | Strengths | Weaknesses |
| --- | --- | --- | --- |
| TransE | Translation-based | Simple, fast | Struggles with 1-to-N relations |
| DistMult | Bilinear model | Effective for symmetric relations | Poor at modeling asymmetric relations |
| ConvKB Torch | CNN-based | Captures complex interactions | Requires more computation |
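To make the table concrete, here are the standard TransE and DistMult scoring functions in plain Python (TransE with the L1 distance, negated so that higher means more plausible). The toy vectors are made up for illustration. DistMult's score does not change when head and tail are swapped, which is why it cannot distinguish (h, r, t) from (t, r, h).

```python
def transe_score(h, r, t):
    # Negative L1 distance ||h + r - t||: higher means h + r lands closer to t.
    return -sum(abs(hi + ri - ti) for hi, ri, ti in zip(h, r, t))


def distmult_score(h, r, t):
    # Trilinear product <h, r, t>; symmetric in h and t by construction.
    return sum(hi * ri * ti for hi, ri, ti in zip(h, r, t))


# Toy 2-dimensional embeddings (illustrative values only)
h, r, t = [1.0, 0.0], [0.5, 2.0], [0.25, 1.0]
print(distmult_score(h, r, t), distmult_score(t, r, h))  # 0.125 0.125
print(transe_score(h, r, t), transe_score(t, r, h))      # -2.25 -3.25
```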

Applications of ConvKB Torch

ConvKB Torch is used in various AI-driven applications:

- Knowledge Graph Completion
    - Predicts missing links between entities (e.g., “Who is the CEO of Apple?”).
- Question Answering Systems
    - Improves the accuracy of AI chatbots and search engines.
- Recommendation Systems
    - Enhances personalized recommendations in e-commerce and streaming platforms.
- Biomedical Research
    - Helps in drug discovery by analyzing relationships between genes, proteins, and diseases.
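Knowledge graph completion answers a query such as (Apple, has_ceo, ?) by scoring every candidate tail entity and ranking the results. The sketch below assumes a scoring function where higher means more plausible; DistMult over hand-picked toy vectors stands in for a trained ConvKB model, and the entity and relation names are hypothetical.

```python
def distmult(h, r, t):
    return sum(a * b * c for a, b, c in zip(h, r, t))


def predict_tails(score_fn, head, relation, candidates, k=3):
    """Return the k candidate tails with the highest scores for (head, relation, ?)."""
    ranked = sorted(candidates, key=lambda t: score_fn(head, relation, t), reverse=True)
    return ranked[:k]


# Hand-picked toy embeddings, not learned ones
ent = {"Apple": [1.0, 0.0], "Tim_Cook": [1.0, 0.5], "Paris": [0.0, 1.0], "France": [0.5, 0.5]}
rel = {"has_ceo": [1.0, 0.0]}


def score(h, r, t):
    return distmult(ent[h], rel[r], ent[t])


candidates = [e for e in ent if e != "Apple"]
print(predict_tails(score, "Apple", "has_ceo", candidates, k=2))  # ['Tim_Cook', 'France']
```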


How does ConvKB Torch handle unseen or new entities during inference?

ConvKB Torch relies on embedding representations for entities and relations that are learned during training. An entity that never appeared in the training triplets therefore has no embedding and cannot be scored directly; it first needs to be embedded into the same vector space as the existing entities.

This can be done by initializing it from pretrained embeddings, or by expanding the knowledge graph with triplets that mention the new entity and training a new embedding for it while the rest of the model stays fixed or is only lightly fine-tuned. Either way, the new embedding is only as good as the relationships available for that entity in the knowledge graph.
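A simple heuristic (not part of ConvKB itself) is to add a row to the entity embedding table, initialize it as the mean of the embeddings of the entities the new node links to, and then fine-tune it on the new triplets. A minimal PyTorch sketch; the function name `add_entity` is chosen for illustration.

```python
import torch
import torch.nn as nn


def add_entity(embedding: nn.Embedding, neighbor_ids):
    """Return a new nn.Embedding with one extra row for an unseen entity.

    Existing rows are copied unchanged. The new (last) row is the mean of the
    embeddings of `neighbor_ids`; if no neighbors are given it keeps its random init.
    """
    old = embedding.weight.detach()
    new_emb = nn.Embedding(old.size(0) + 1, old.size(1), device=old.device, dtype=old.dtype)
    with torch.no_grad():
        new_emb.weight[:-1] = old
        if neighbor_ids:
            new_emb.weight[-1] = old[neighbor_ids].mean(dim=0)
    return new_emb


torch.manual_seed(0)
entity_emb = nn.Embedding(5, 4)          # toy table: 5 known entities, d = 4
bigger = add_entity(entity_emb, [1, 3])  # new entity linked to entities 1 and 3
new_id = bigger.num_embeddings - 1       # id 5 now refers to the new entity
```

After swapping the enlarged table into the model, recreate the optimizer so that it tracks the new parameter.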

Does ConvKB Torch Support Multi-Relational Datasets?

Yes, ConvKB Torch fully supports multi-relational knowledge graphs, making it well-suited for datasets that involve multiple types of relations between entities. The model leverages convolutional layers to extract complex, high-level patterns across all types of relations, allowing it to effectively handle multi-relational data. This capability is particularly valuable when dealing with knowledge graphs that contain diverse, interlinked entities with various relationship types.

- Multiple Relation Types: ConvKB Torch can handle different kinds of relations between entities, such as “is_a,” “located_in,” or “has_part.”

- Complex Pattern Recognition: The convolutional filters can learn and capture complex interactions across different relation types in the graph.

- Versatility: This makes ConvKB Torch versatile, ideal for a wide range of applications, from recommendation systems to semantic search, where multiple relationships are involved.

- Enhanced Model Performance: By processing multi-relational data, the model can generate richer embeddings and improve prediction accuracy.
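Before training, string triplets are usually mapped to integer ids, with relations in their own id space so that each relation type gets its own embedding row. In ConvKB the convolution filters are shared across all relations, and the relation embedding carries the type-specific information. A minimal sketch with hypothetical example triplets:

```python
def index_triples(triples):
    """Map (head, relation, tail) strings to integer ids.

    Entities and relations get separate id spaces, assigned in order of first appearance.
    """
    ent2id, rel2id = {}, {}
    encoded = []
    for h, r, t in triples:
        for e in (h, t):
            ent2id.setdefault(e, len(ent2id))
        rel2id.setdefault(r, len(rel2id))
        encoded.append((ent2id[h], rel2id[r], ent2id[t]))
    return encoded, ent2id, rel2id


triples = [
    ("Paris", "located_in", "France"),
    ("France", "is_a", "Country"),
    ("Paris", "is_a", "City"),
]
encoded, ent2id, rel2id = index_triples(triples)
print(encoded)  # [(0, 0, 1), (1, 1, 2), (0, 1, 3)]
```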


How to Install and Use ConvKB Torch

To start using ConvKB Torch, follow these simple steps:

Step 1: Install PyTorch

Since ConvKB Torch is built on PyTorch, install it first:

```bash
# torch is the only requirement here; torchvision and torchaudio are not needed for KG embeddings
pip install torch
```

Step 2: Install ConvKB Torch

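Clone the reference implementation from the ConvKB author's GitHub repository. It is not published as a pip package, so check the repository README for the exact dependencies and for the directory that holds the PyTorch code.

```bash
git clone https://github.com/daiquocnguyen/ConvKB.git
cd ConvKB
# Install the dependencies listed by the repository (follow its README if no requirements.txt is present)
pip install -r requirements.txt
```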

Step 3: Load a Pre-Trained Model

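The import path, constructor arguments, and checkpoint file name below are placeholders; adjust them to match the implementation you cloned and a checkpoint you have trained or downloaded.

```python
import torch

from ConvKB.model import ConvKB  # placeholder import path; depends on the repository layout

# The sizes must match the ones used when the checkpoint was trained
model = ConvKB(num_entities=50000, num_relations=100, embedding_dim=200)
model.load_state_dict(torch.load("pretrained_model.pth", map_location="cpu"))
model.eval()  # switch off dropout for inference
```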

Step 4: Train a Custom Model

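This loop assumes that `model` maps a batch of (head, relation, tail) id triples to one score per triple, and that `pos_triples` and `neg_triples` are placeholder tensors built from your training data.

```python
import torch

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)
loss_function = torch.nn.MarginRankingLoss(margin=1.0)

# Sample training loop (full batch for brevity; negatives are corrupted copies of positives)
model.train()
for epoch in range(10):
    optimizer.zero_grad()
    pos_scores = model(pos_triples)
    neg_scores = model(neg_triples)
    # target = 1 asks for pos_scores > neg_scores + margin (higher = more plausible);
    # use -1 instead if your implementation gives valid triplets lower scores.
    target = torch.ones_like(pos_scores)
    loss = loss_function(pos_scores, neg_scores, target)
    loss.backward()
    optimizer.step()
```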
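The training loop needs corrupted (negative) triplets. A common recipe, sketched here in plain Python, replaces the head or the tail of a true triplet with a random entity and rejects corruptions that are themselves known true triplets. The 50/50 head-or-tail choice is an assumption; some implementations use a relation-dependent probability instead.

```python
import random


def corrupt(triple, num_entities, known, rng=random):
    """Return a corrupted copy of `triple` that is not in the set `known`.

    Replaces the head or the tail (50/50) with a random entity id. Loops until
    it finds an unknown triplet, so `known` must not cover every corruption.
    """
    h, r, t = triple
    while True:
        e = rng.randrange(num_entities)
        cand = (e, r, t) if rng.random() < 0.5 else (h, r, e)
        if cand not in known:
            return cand


rng = random.Random(0)
known = {(0, 0, 1), (1, 1, 2)}  # toy set of true triplets (as ids)
negative = corrupt((0, 0, 1), num_entities=10, known=known, rng=rng)
```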

Step 5: Evaluate Performance

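Here `evaluate` stands in for your implementation's evaluation routine. For link prediction, report MRR and Hits@k alongside (or instead of) accuracy.

```python
# `evaluate` is a hypothetical helper assumed to return a fraction between 0 and 1
accuracy = evaluate(model, test_data)
print(f"Model Accuracy: {accuracy * 100:.2f}%")
```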

Advantages of Using ConvKB Torch

✔ Captures Complex Relationships – CNNs extract high-level patterns in knowledge graphs.

✔ Better Generalization – Learns richer embeddings compared to traditional models.

✔ Easy Integration – The PyTorch implementation works with standard PyTorch tooling such as optimizers, data loaders, and GPU training.

✔ Scalable – Can be trained on large-scale datasets efficiently.

What types of knowledge graphs are best suited for ConvKB Torch?

ConvKB Torch is ideal for large, dense, and structured knowledge graphs that contain complex relationships across multiple entities. It excels in domains such as biomedical research, social networks, and e-commerce, where rich, multifaceted interactions are present.

It works especially well with graphs involving various types of relations, where deep learning’s ability to capture nuanced patterns and interactions can provide significant benefits in tasks like link prediction and entity classification.


Challenges & Limitations

While ConvKB Torch is powerful, it has some limitations:

🚩 Computational Cost – Requires higher GPU resources compared to simpler models.

🚩 Data Sparsity Issues – Entities and relations that appear in only a few triplets get poorly trained embeddings, so performance drops on sparse knowledge graphs.

🚩 Hyperparameter Tuning – Needs extensive tuning for optimal results.

How to Overcome These Challenges

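✔ Initialize from pretrained embeddings to reduce training time (the original ConvKB paper initializes its entity and relation embeddings from a trained TransE model).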

✔ Optimize hyperparameters using grid search.

✔ Implement data augmentation techniques to improve learning.
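Grid search simply tries every combination of candidate hyperparameter values. In this sketch, `train_and_evaluate` is a hypothetical callback that would train a model with the given settings and return a validation score such as MRR; a toy formula stands in for it so the example runs on its own.

```python
from itertools import product


def grid_search(train_and_evaluate, grid):
    """Try every combination in `grid`; return (best_score, best_params)."""
    best = None
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = train_and_evaluate(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


def toy_objective(lr, num_filters):
    # Stand-in for "train ConvKB with these settings and return validation MRR"
    return num_filters / 100 - lr


grid = {"lr": [1e-3, 1e-4], "num_filters": [50, 100]}
best_score, best_params = grid_search(toy_objective, grid)
print(best_params)  # {'lr': 0.0001, 'num_filters': 100}
```

The number of training runs is the product of the list lengths, so with expensive models it pays to keep each list short or to switch to random search.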


FAQs About ConvKB Torch

1. What makes ConvKB Torch different from other KG embedding models?

ConvKB Torch uses CNNs to extract global relationships in knowledge graphs, whereas traditional models use vector arithmetic.

2. Can ConvKB Torch be used with real-world applications?

Yes! Knowledge graph embedding models such as ConvKB are applied to knowledge graph completion, question answering, search, and biomedical research.

3. How much computational power is needed?

ConvKB Torch requires a GPU for training large datasets but can run on a CPU for small-scale applications.
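In PyTorch, the same code can run on either device by selecting it once at startup. A minimal sketch; the tiny linear layer is a placeholder for a ConvKB model.

```python
import torch

# Use a GPU when one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = torch.nn.Linear(4, 1).to(device)   # placeholder model
batch = torch.randn(8, 4, device=device)   # inputs must live on the same device
out = model(batch)
```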

4. Is ConvKB Torch beginner-friendly?

While the concept is advanced, PyTorch integration makes it easier to implement for those with basic Python knowledge.

5. Where can I find ConvKB Torch documentation?

You can refer to the GitHub repository (https://github.com/daiquocnguyen/ConvKB) or the PyKEEN documentation, which includes a ConvKB model.

Final Thoughts

ConvKB Torch is a game-changer for knowledge graph embeddings, bringing deep learning advancements into structured data analysis. Whether you’re a data scientist, AI researcher, or developer, mastering ConvKB Torch can elevate your machine learning projects.

If you’re looking to build next-gen AI applications, ConvKB Torch is an excellent choice for knowledge graph completion, semantic search, and recommendation systems.

💡 Want to start now? Install ConvKB Torch and build your first KG model today! 🚀
